Last Friday I visited Fei-Fei Li’s Vision Lab at Stanford University, where I had the pleasure of giving a very informal talk on our ongoing work on social media annotation. The slides of the talk are available online.

Our paper entitled “Enriching and Localizing Semantic Tags in Internet Videos” has been accepted by ACM Multimedia 2011.

Tagging of multimedia content is becoming more and more widespread as Web 2.0 sites, like Flickr and Facebook for images and YouTube and Vimeo for videos, have popularized tagging functionalities among their users. These user-generated tags are used to retrieve multimedia content and to ease browsing and exploration of media collections, e.g. using tag clouds. However, not all media are equally tagged by users. With current browsers it is easy to tag a single photo, and even tagging a part of a photo, like a face, has become common on sites like Flickr and Facebook. Tagging a video sequence, on the other hand, is more complicated and time-consuming, so users tend to tag only the overall content of a video.

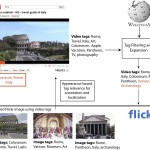

In this paper we present a system for automatic video annotation that increases the number of tags originally provided by users and localizes them temporally, associating tags with shots. This approach exploits the collective knowledge embedded in tags and Wikipedia, and the visual similarity of keyframes and images uploaded to social sites like YouTube and Flickr. Our paper is now available online.
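To give a rough feel for what "associating tags with shots" can mean, here is a minimal sketch of one generic way to do it. It is not the algorithm from the paper. It assumes that each shot is represented by a keyframe feature vector, that for each candidate tag we already have a few feature vectors of reference images (for instance retrieved from Flickr for that tag), and that a tag is assigned to a shot when its keyframe is close enough, in cosine similarity, to at least one reference image. The function names, the data layout and the `threshold` value are all hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def localize_tags(shot_keyframes, tag_images, threshold=0.9):
    """Assign candidate tags to shots by visual similarity (hypothetical sketch).

    shot_keyframes: dict mapping shot id -> keyframe feature vector.
    tag_images: dict mapping tag -> list of reference image feature vectors.
    Returns a dict mapping shot id -> sorted list of tags localized in that shot.
    """
    result = {}
    for shot_id, keyframe in shot_keyframes.items():
        # A tag is kept for this shot if any of its reference images is similar enough.
        tags = [
            tag
            for tag, images in tag_images.items()
            if any(cosine(keyframe, img) >= threshold for img in images)
        ]
        result[shot_id] = sorted(tags)
    return result


shots = {"shot1": [1.0, 0.0], "shot2": [0.0, 1.0]}
tag_images = {"beach": [[0.95, 0.05]], "city": [[0.1, 0.9], [0.0, 1.0]]}
print(localize_tags(shots, tag_images))
```

With these toy two-dimensional features, `shot1` receives `beach` and `shot2` receives `city`; a real system would use much richer visual descriptors and a tuned threshold.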

Our paper “Event detection and recognition for semantic annotation of video” was accepted for publication in the Springer journal Multimedia Tools and Applications (MTAP), in the special issue “Survey papers in Multimedia by World Experts”. The paper is available online now (see publications) and also on SpringerLink (DOI).

In this paper we survey the field of event recognition, from interest point detectors and descriptors to event modelling techniques and knowledge management technologies. We provide an overview of the methods, categorising them according to video production methods and video domains, and according to the types of events and actions that are typical of these domains.

Our paper on “Tag suggestion and localization in user-generated videos based on social knowledge” won the best paper award at ACM SIGMM Workshop on Social Media (WSM’10) in conjunction with ACM Multimedia 2010.

Nowadays, almost every website that provides means for sharing user-generated multimedia content, like Flickr, Facebook, YouTube and Vimeo, has tagging functionalities that let users annotate the material they want to share. The tags are then used to retrieve the uploaded content and to ease browsing and exploration of these collections, e.g. using tag clouds. However, while tagging a single image is straightforward, and sites like Flickr and Facebook also make it easy to tag portions of the uploaded photos, tagging a video sequence is more cumbersome, so users tend to tag only the overall content of a video. While research on image tagging has received considerable attention in recent years, there are still very few works that address the problem of automatically assigning tags to videos and locating them temporally within the video sequence.

In this paper we present a system for video tag suggestion and temporal localization based on collective knowledge and visual similarity of frames. The algorithm suggests new tags that can be associated with a given keyframe by exploiting both the tags and the visual features of videos and images uploaded to social sites like YouTube and Flickr. Our paper is now available online.
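One common way to combine visual similarity with the tags of social media is neighbour voting: find the images that look most like a keyframe and let their tags vote. The snippet below is a minimal sketch of that generic idea, not the method described in the paper. It assumes features are plain vectors compared with cosine similarity, and that each reference item is a `(feature_vector, set_of_tags)` pair; the names `suggest_tags`, `k` and `min_votes` are hypothetical.

```python
import math
from collections import Counter


def cosine(a, b):
    """Cosine similarity between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def suggest_tags(keyframe, references, k=3, min_votes=2):
    """Suggest tags for a keyframe by neighbour voting (hypothetical sketch).

    references: list of (feature_vector, set_of_tags) pairs, e.g. tagged
    images and video frames collected from social sites.
    Returns the sorted tags that appear in at least `min_votes` of the
    `k` most visually similar references.
    """
    # Rank reference items by visual similarity to the keyframe.
    ranked = sorted(references, key=lambda r: cosine(keyframe, r[0]), reverse=True)
    votes = Counter()
    for _, tags in ranked[:k]:
        votes.update(tags)  # each neighbour votes once for each of its tags
    return sorted(tag for tag, count in votes.items() if count >= min_votes)


refs = [
    ([1.0, 0.0], {"beach", "sea"}),
    ([0.9, 0.1], {"sea", "surf"}),
    ([0.8, 0.2], {"sea", "beach"}),
    ([0.0, 1.0], {"city"}),
]
print(suggest_tags([1.0, 0.05], refs))
```

Here the three nearest references vote `sea` three times, `beach` twice and `surf` once, so with `min_votes=2` the sketch suggests `beach` and `sea`. Requiring several votes is a simple guard against noisy, idiosyncratic user tags.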

Our paper on “Video Annotation and Retrieval Using Ontologies and Rule Learning” was accepted for publication in IEEE MultiMedia magazine.

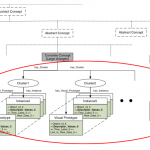

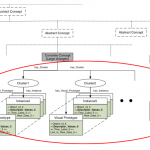

In this paper we present an approach for automatic annotation and retrieval of video content, based on ontologies and semantic concept classifiers. A novel rule-based method is used to describe and recognize composite concepts and events. Our algorithm automatically learns rules expressed in the Semantic Web Rule Language (SWRL), exploiting the knowledge embedded in the ontology. Co-occurrence relations between concepts and the temporal consistency of video data are used to improve the performance of individual concept detectors. Finally, we present a web video search engine, based on ontologies, that permits queries using a composition of Boolean and temporal relations between concepts.
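As a rough illustration of queries that compose Boolean and temporal relations between concepts, the sketch below stores detected concepts as time intervals per video and answers queries such as "goal before crowd cheering". It is a simplified stand-in, not the ontology-based search engine from the paper: the `Annotation` type, the concept names and the two relations (an Allen-style strict "before" and a generic "overlaps in time") are hypothetical. Boolean AND/OR are expressed as set intersection/union of the matching video ids.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Annotation:
    """A detected concept occurrence in a video (hypothetical data model)."""
    concept: str
    start: float  # seconds
    end: float    # seconds


def before(a, b):
    """Allen-style 'before': a ends strictly before b starts."""
    return a.end < b.start


def overlaps_in_time(a, b):
    """True if the two intervals share some time span."""
    return a.start < b.end and b.start < a.end


def videos_matching(index, first, second, relation):
    """Ids of videos where some `first` occurrence is related to some `second` one."""
    hits = set()
    for video_id, annotations in index.items():
        xs = [a for a in annotations if a.concept == first]
        ys = [a for a in annotations if a.concept == second]
        if any(relation(x, y) for x in xs for y in ys):
            hits.add(video_id)
    return hits


index = {
    "v1": [Annotation("goal", 10, 15), Annotation("crowd_cheering", 16, 20)],
    "v2": [Annotation("crowd_cheering", 2, 5), Annotation("goal", 30, 33)],
    "v3": [Annotation("goal", 40, 44), Annotation("crowd_cheering", 42, 50)],
}
after_goal = videos_matching(index, "goal", "crowd_cheering", before)
during_goal = videos_matching(index, "goal", "crowd_cheering", overlaps_in_time)
print(sorted(after_goal), sorted(during_goal), sorted(after_goal | during_goal))
```

In this toy index, "goal before cheering" matches only `v1`, "goal overlapping cheering" matches only `v3`, and the Boolean OR of the two queries returns both.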

Our paper on “Semantic annotation of soccer videos by visual instance clustering and spatial/temporal reasoning in ontologies” was accepted for publication in the Springer journal Multimedia Tools and Applications (MTAP).

In this paper we present a framework for semantic annotation of soccer videos that exploits an ontology model referred to as a Dynamic Pictorially Enriched Ontology, in which the ontology, defined using OWL, includes both schema and data. Visual instances are used as matching references for the visual descriptors of the entities to be annotated. The paper is available online now, and also on SpringerLink in the “Online First” section (DOI).
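To make the idea of "visual instances as matching references" a little more concrete, here is a minimal nearest-reference sketch. It is a generic illustration, not the framework from the paper, and it does not involve OWL or ontology reasoning. It assumes each visual instance is a `(label, reference_descriptor)` pair with descriptors as plain vectors, and it labels a new descriptor with its closest instance in Euclidean distance, returning `None` when nothing is close enough. The labels, the function name `annotate` and the `max_distance` value are hypothetical.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two descriptors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def annotate(descriptor, instances, max_distance):
    """Label a descriptor with its nearest visual instance (hypothetical sketch).

    instances: list of (label, reference_descriptor) pairs.
    Returns the label of the closest instance, or None if even the closest
    one is farther than max_distance (i.e. no reliable match).
    """
    best_label, best_dist = None, math.inf
    for label, reference in instances:
        d = euclidean(descriptor, reference)
        if d < best_dist:
            best_label, best_dist = label, d
    return best_label if best_dist <= max_distance else None


instances = [
    ("event_a", [0.9, 0.1, 0.0]),
    ("event_b", [0.1, 0.8, 0.1]),
    ("event_c", [0.0, 0.2, 0.9]),
]
print(annotate([0.85, 0.15, 0.0], instances, max_distance=0.3))
print(annotate([0.5, 0.5, 0.5], instances, max_distance=0.3))
```

The first descriptor lies very close to `event_a` and is labelled accordingly, while the second is far from every reference, so the sketch declines to annotate it. Unmatched descriptors like this are the kind of data that could, in principle, be clustered into new visual instances.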