Last Friday I visited Fei-Fei Li’s Vision Lab at Stanford University, where I had the pleasure of giving a very informal talk on our ongoing work on social media annotation. The slides of the talk are available online.

Our ICME 2013 paper “An evaluation of nearest-neighbor methods for tag refinement” by Tiberio Uricchio, Lamberto Ballan, Marco Bertini and Alberto Del Bimbo is now available online.

The success of media sharing and social networks has led to the availability of extremely large quantities of images that are tagged by users. The need for methods that manage the combination of media and metadata efficiently and effectively poses significant challenges. In particular, automatic annotation of social images has become an important research topic for the multimedia community. In this paper we propose the use of nearest-neighbor methods for tag refinement and report an extensive and rigorous evaluation on two standard large-scale datasets.
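To give a rough idea of what a nearest-neighbor approach to tag refinement can look like, here is a minimal sketch of plain neighbor voting: the tags of the visually closest images vote for the tags of a query image. This is only an illustration and not necessarily one of the methods evaluated in the paper; the function names, the Euclidean distance, the toy feature vectors and the values of `k` and `top_n` are all assumptions.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def refine_tags(query_feature, dataset, k=3, top_n=2):
    """Rank tags for a query image by votes from its k nearest neighbors.

    dataset is a list of (feature_vector, tags) pairs (hypothetical layout).
    """
    # Keep the k images whose features are closest to the query.
    neighbors = sorted(dataset, key=lambda item: euclidean(query_feature, item[0]))[:k]
    # Each neighbor votes once for each of its tags.
    votes = Counter(tag for _, tags in neighbors for tag in set(tags))
    # Sort by descending votes, breaking ties alphabetically for determinism.
    ranked = sorted(votes.items(), key=lambda kv: (-kv[1], kv[0]))
    return [tag for tag, _ in ranked[:top_n]]


# Toy data: 2-D "visual features" with user tags (made up for illustration).
toy = [
    ([0.0, 0.0], ["beach", "sea"]),
    ([0.1, 0.0], ["sea", "sunset"]),
    ([0.0, 0.2], ["beach", "sea", "holiday"]),
    ([5.0, 5.0], ["city", "night"]),
]
refined = refine_tags([0.05, 0.05], toy, k=3, top_n=2)
print(refined)
```

On this toy data the three nearest images are the seaside ones, so "sea" and "beach" collect the most votes, while tags from the distant "city" image are ignored.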

Andy Bagdanov and I are organizing a paper reading group on Multimedia and Vision at the MICC, University of Florence.

We plan a meeting once every three weeks (approximately), usually from 12.00 to 13.30. The schedule of our meetings and the material are available on this page.

Our paper “Copy-Move Forgery Detection and Localization by Means of Robust Clustering with J-Linkage” by I. Amerini, L. Ballan, R. Caldelli, A. Del Bimbo, L. Del Tongo, and G. Serra, has been accepted for publication by the Signal Processing: Image Communication journal (pdf, link); more info on this page.

Determining whether a digital image is authentic is a key purpose of image forensics. There are several different tampering attacks, but one of the most common and immediate is copy-move. A recent and effective approach for detecting copy-move forgeries is to use local visual features such as SIFT. This procedure can often be unsatisfactory, in particular when the copied patch contains pixels that are spatially distant, and when the pasted area is near the original source. In such cases, a better estimation of the cloned area is necessary in order to obtain an accurate forgery localization. We present a novel approach for copy-move forgery detection and localization based on J-Linkage, which performs a robust clustering in the space of the geometric transformation.
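As a rough illustration of the feature-matching step that this kind of detector starts from, the sketch below matches the local descriptors of an image against each other and keeps pairs that pass a nearest-neighbor ratio test. It does not implement J-Linkage, and it is not the code from the paper: the toy 3-D descriptors stand in for real SIFT descriptors, and the function names, the `ratio` threshold and the `min_offset` value are assumptions.

```python
import math


def dist(a, b):
    """Euclidean distance between two vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def self_matches(keypoints, descriptors, ratio=0.6, min_offset=10.0):
    """Return sorted index pairs (i, j), i < j, of keypoints in the same
    image whose descriptors match under a nearest-neighbor ratio test."""
    pairs = set()
    for i, d in enumerate(descriptors):
        # Candidates: every other keypoint that is spatially far enough,
        # so that a keypoint is not matched to its immediate surroundings.
        cands = sorted(
            (dist(d, descriptors[j]), j)
            for j in range(len(descriptors))
            if j != i and dist(keypoints[i], keypoints[j]) >= min_offset
        )
        if len(cands) < 2:
            continue
        (d1, j1), (d2, _) = cands[0], cands[1]
        # Accept only if the best match is clearly better than the second best.
        if d1 < ratio * d2:
            pairs.add((min(i, j1), max(i, j1)))
    return sorted(pairs)


# Toy image: keypoints 2 and 3 are copies of keypoints 0 and 1, shifted by (100, 50).
keypoints = [(10, 10), (20, 10), (110, 60), (120, 60), (200, 200)]
descriptors = [[1, 0, 0], [0, 1, 0], [1, 0, 0.01], [0, 1, 0.01], [0, 0, 1]]

matches = self_matches(keypoints, descriptors)
offsets = [
    (keypoints[j][0] - keypoints[i][0], keypoints[j][1] - keypoints[i][1])
    for i, j in matches
]
print(matches, offsets)
```

In this toy case both matched pairs share the same displacement, which is the kind of geometric consistency that a clustering step in the space of transformations can then exploit to localize the cloned area.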

Our paper entitled “Context-Dependent Logo Matching and Recognition” – by H. Sahbi, L. Ballan, G. Serra and A. Del Bimbo – has been accepted for publication in the IEEE Transactions on Image Processing (pdf, link). Part of this work was conducted while G. Serra and I were visiting scholars at Telecom ParisTech (in spring 2010).

In this paper we contribute a novel variational framework able to match and recognize multiple instances of multiple reference logos in image archives. Reference logos and test images are seen as constellations of local features (interest points, regions, etc.) and matched by minimizing an energy function that mixes (i) a fidelity term that measures the quality of feature matching, (ii) a neighborhood criterion that captures feature co-occurrence/geometry, and (iii) a regularization term that controls the smoothness of the matching solution. We also introduce a detection/recognition procedure and study its theoretical consistency. We show the validity of our method through extensive experiments on the novel and challenging MICC-Logos dataset, outperforming baseline as well as state-of-the-art matching/recognition procedures by 20%. We also present results on another public dataset, the FlickrLogos-27 image collection, to demonstrate the generality of our method.

Our paper “Combining Generative and Discriminative Models for Classifying Social Images from 101 Object Categories” has been accepted at ICPR’12. We use a hybrid generative-discriminative approach (LDA + SVM with non-linear kernels) over several visual descriptors (SIFT, GIST, colorSIFT).

A major contribution of our work is also the introduction of a novel dataset, called MICC-Flickr101, based on the popular Caltech 101 and collected from Flickr. We demonstrate the effectiveness and efficiency of our method by testing it on both datasets, and we evaluate the impact of combining image features and tags for object recognition.

I am co-organizer (with Marco Bertini, Alex Berg and Cees Snoek) of the International Workshop on Web-scale Vision and Social Media, in conjunction with ECCV 2012.

The World Wide Web has become a large ecosystem that reaches billions of users through information processing and sharing, and most of this information resides in pixels. Web-based services like YouTube and Flickr, and social networks such as Facebook, have become more and more popular, allowing people to easily upload, share and annotate massive amounts of images and videos all over the web.

Vision and social media has thus recently become a very active interdisciplinary research area, involving computer vision, multimedia, machine learning, information retrieval, and data mining. This workshop aims to bring together leading researchers in the related fields to advocate and promote new research directions for problems involving vision and social media, such as large-scale visual content analysis, search and mining.

Our paper entitled “Effective Codebooks for Human Action Representation and Classification in Unconstrained Videos” by L. Ballan, M. Bertini, A. Del Bimbo, L. Seidenari and G. Serra has been accepted for publication in the IEEE Transactions on Multimedia.

Recognition and classification of human actions for annotation of unconstrained video sequences have proven to be challenging because of variations in the environment, the appearance of actors, the modalities in which the same action is performed by different persons, speed and duration, and the points of view from which the event is observed. This variability is reflected in the difficulty of defining effective descriptors and deriving appropriate and effective codebooks for action categorization.

In this paper we propose a novel and effective solution to classify human actions in unconstrained videos. It improves on previous contributions through the definition of a novel local descriptor that uses image gradient and optic flow to model, respectively, the appearance and motion of human actions at interest point regions. In the formation of the codebook we employ radius-based clustering with soft assignment in order to create a rich vocabulary that may account for the high variability of human actions. We show that our solution achieves very good performance with no need for parameter tuning. We also show that a strong reduction of computation time can be obtained, with little loss of accuracy, by applying codebook size reduction with Deep Belief Networks.
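For readers unfamiliar with soft assignment, here is a minimal sketch of the general idea: instead of counting each local descriptor only for its closest codeword, every codeword receives a weight that decays with distance. The Gaussian kernel, the `sigma` value, the function names and the toy 2-D codebook are assumptions made for illustration; the codebook here is simply given, not built with radius-based clustering, and this is not the exact formulation used in the paper.

```python
import math


def sq_dist(a, b):
    """Squared Euclidean distance between two vectors of equal length."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def soft_assign(descriptor, codebook, sigma=1.0):
    """Weights of one descriptor over all codewords (Gaussian kernel, sums to 1)."""
    weights = [math.exp(-sq_dist(descriptor, c) / (2 * sigma ** 2)) for c in codebook]
    total = sum(weights)
    return [w / total for w in weights]


def encode(descriptors, codebook, sigma=1.0):
    """Soft-assignment histogram of a set of descriptors, averaged over descriptors."""
    hist = [0.0] * len(codebook)
    for d in descriptors:
        for k, w in enumerate(soft_assign(d, codebook, sigma)):
            hist[k] += w
    return [h / len(descriptors) for h in hist]


# Toy codebook with two codewords and three local descriptors (made up).
codebook = [[0.0, 0.0], [2.0, 0.0]]
halfway = soft_assign([1.0, 0.0], codebook)
histogram = encode([[0.0, 0.0], [1.0, 0.0], [2.0, 0.0]], codebook)
print(halfway, histogram)
```

A descriptor lying halfway between two codewords contributes equally to both, whereas hard assignment would force an arbitrary choice; this is one way a vocabulary can better tolerate the variability of the descriptors.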

Our method has obtained very competitive performance on several popular action-recognition datasets such as KTH (accuracy = 92.7%), Weizmann (accuracy = 95.4%) and Hollywood-2 (mAP = 0.451).

I am involved in the local committee of ECCV 2012. A year from now, we will host the 12th European Conference on Computer Vision in Florence. ECCV has an established tradition of high scientific quality, with double-blind reviewing and very low acceptance rates (about 5% for orals and 25% for posters in 2010). The conference has an overall duration of one week. The main conference lasts four days, starting from the second day, and has a single-track format, with about ten oral presentations and one poster session per day. Tutorials are held on the first day, and workshops on the last two days. Industrial exhibits and demo sessions are also scheduled in the conference programme.

ECCV 2012 will be held in Florence, Italy, on October 7-13, 2012. Visit the ECCV 2012 site.

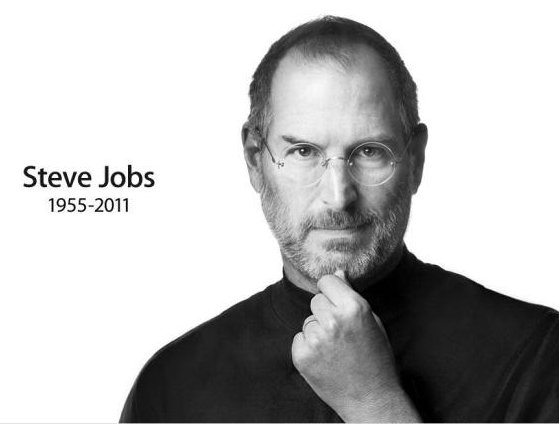

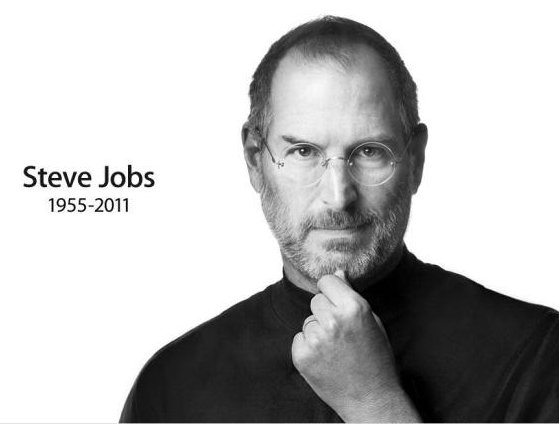

Thanks Steve, RIP.

“When I was young, there was an amazing publication called The Whole Earth Catalog, which was one of the bibles of my generation. It was created by a fellow named Stewart Brand not far from here in Menlo Park, and he brought it to life with his poetic touch. […] It was the mid-1970s, and I was your age. On the back cover of their final issue was a photograph of an early morning country road, the kind you might find yourself hitchhiking on if you were so adventurous. Beneath it were the words: Stay Hungry. Stay Foolish. It was their farewell message as they signed off. Stay Hungry. Stay Foolish. And I have always wished that for myself. And now, as you graduate to begin anew, I wish that for you. Stay Hungry. Stay Foolish.” Steve Jobs, Stanford University, 12th of June 2005 (link to the video)
